I guess I am on a mission, a mission to moderate the awe that so many people seem to feel about AI.

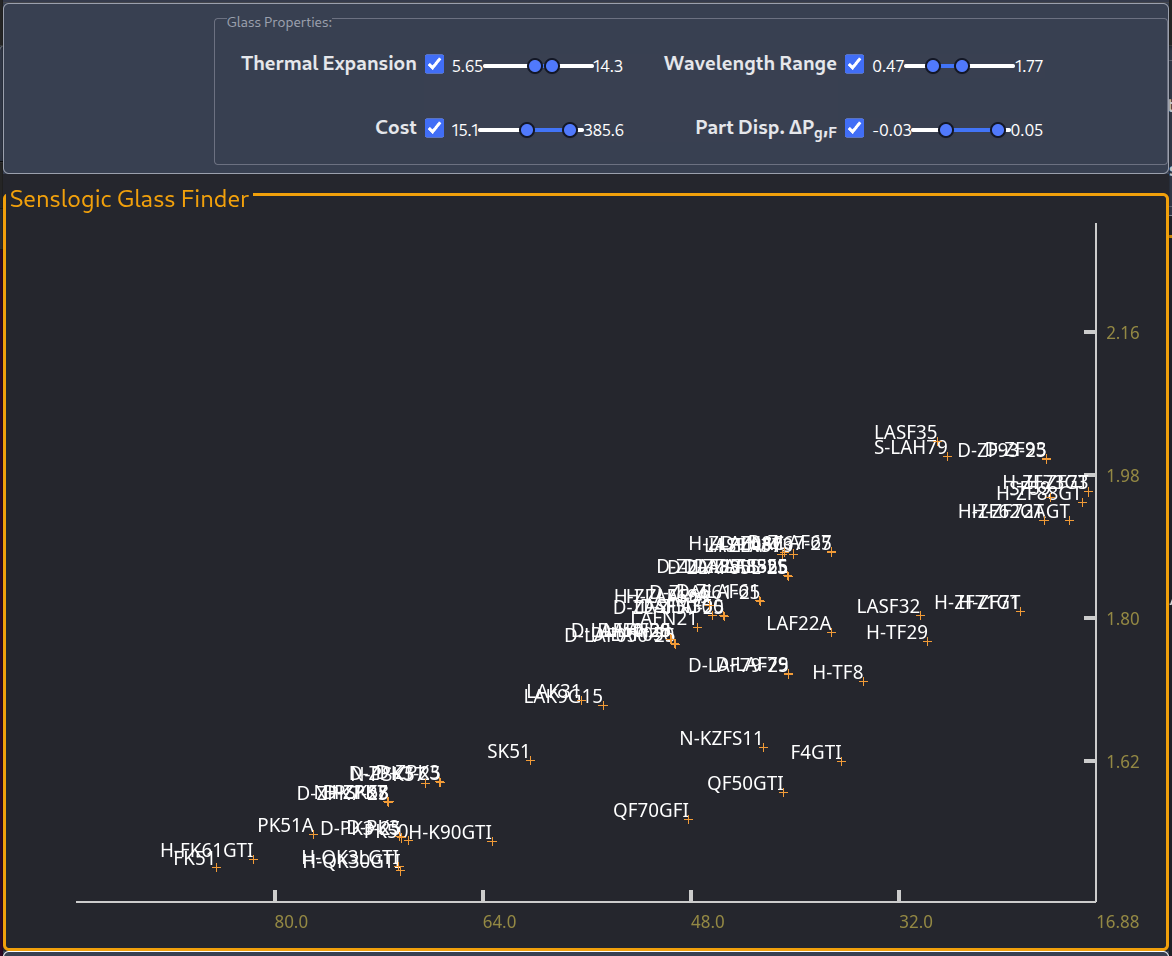

Since I’m into optics, I write a lot of code, all sorts of code, and obviously I’m interested in spending as little time as I can doing it.

Before I added Zernike plotting using the OpenGL pipeline to my own platform, I asked ChatGPT to write it for me. That was 18 months ago.

Needless to say, I found the result useless. Why? As in my previous post, the code looked OK from a distance, but in the not-so-deep details it was useless. Let me show you what I mean.

Computing Zernike polynomials

When computing Zernike polynomials, you need to compute the radial polynomial (which takes as its parameter the distance from the center to the point given by the coordinates x and y) and either the sine or the cosine of the angle. So let me ask you, humans who cannot compete with the AI juggernaut: if you have already computed the distance (the hypotenuse of a triangle) from the coordinates x and y, how do you proceed to compute the sine and cosine of the angle?
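For reference, this is the standard unnormalized definition, using the same convention as the generated shader further down (cosine for m ≥ 0, sine for m < 0). The radial part is zero whenever n − |m| is odd.

$$
Z_n^m(\rho,\theta) =
\begin{cases}
R_n^{|m|}(\rho)\cos(m\theta), & m \ge 0,\\[2pt]
R_n^{|m|}(\rho)\sin(|m|\theta), & m < 0,
\end{cases}
$$

$$
R_n^{m}(\rho) = \sum_{k=0}^{(n-m)/2} \frac{(-1)^k\,(n-k)!}{k!\,\left(\frac{n+m}{2}-k\right)!\,\left(\frac{n-m}{2}-k\right)!}\,\rho^{\,n-2k}, \qquad 0 \le m \le n,\ n-m \text{ even}.
$$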

I think 100% of you who have read this far do it by dividing x and y by the hypotenuse. What does the latest and greatest AI do? It takes the coordinates, computes the angle using the inverse tangent and then, as you might have guessed, uses that angle to compute the sine and cosine.

All that energy for what?

This was the result I got 18 months ago, and after 300 GWh (for the GPUs alone, before adding cooling and networking), or 90 days of reinforcement learning, we get exactly the same result.

There is barely a smidgen of intelligence here. It is just a purely textual translation of some textbook material into another form of text.

I have tried to draw attention to the logarithmic returns of AI performance versus compute power. Seeing this, I’d take logarithmic returns any time, because what I see here is no improvement at all.

Obviously, I asked the awe-inspiring (I’m kidding) DeepSeek the same question, and the result was actually even worse. Not by much, because it’s difficult to produce worse code than this.

If you want to test it for yourself, here’s my prompt:

> Can you write an OpenGL shader that generates a 2D density map based on a vector of zernike coefficients?

The result is shown below.

```glsl
uniform vec2 resolution; // Screen resolution
uniform float zernikeCoeffs[15]; // Array of Zernike coefficients (adjust size as needed)
uniform float scale; // Scaling factor for the density map

// Convert cartesian (x,y) to polar (rho, theta)
vec2 cartesianToPolar(vec2 xy) {
    float rho = length(xy);
    float theta = atan(xy.y, xy.x);
    return vec2(rho, theta);
}

// Zernike radial polynomial function
float radialPoly(int n, int m, float rho) {
    float result = 0.0;
    m = abs(m);
    if ((n - m) % 2 != 0) return 0.0;
    for (int k = 0; k <= (n - m) / 2; k++) {
        float coef = 1.0;
        // Calculate binomial coefficient
        for (int i = 1; i <= k; i++) {
            coef *= float(n - 2 * k + i) / float(i);
        }
        for (int i = 1; i <= (n - m) / 2 - k; i++) {
            coef *= float(m + i) / float(i);
        }
        result += pow(-1.0, float(k)) * coef * pow(rho, float(n - 2 * k));
    }
    return result;
}

// Zernike polynomial evaluation
float zernike(int index, vec2 polar) {
    float rho = polar.x;
    float theta = polar.y;

    // Zernike polynomial ordering (n,m): (0,0), (1,-1), (1,1), (2,-2), (2,0), etc.
    int n, m;
    if (index == 0) { n = 0; m = 0; } // Z0,0
    else if (index == 1) { n = 1; m = -1; } // Z1,-1
    else if (index == 2) { n = 1; m = 1; } // Z1,1
    else if (index == 3) { n = 2; m = -2; } // Z2,-2
    else if (index == 4) { n = 2; m = 0; } // Z2,0
    else if (index == 5) { n = 2; m = 2; } // Z2,2
    else if (index == 6) { n = 3; m = -3; } // Z3,-3
    else if (index == 7) { n = 3; m = -1; } // Z3,-1
    else if (index == 8) { n = 3; m = 1; } // Z3,1
    else if (index == 9) { n = 3; m = 3; } // Z3,3
    else return 0.0;

    if (rho > 1.0) return 0.0; // Zernike defined only within unit disk

    float radial = radialPoly(n, m, rho);
    if (m < 0) return radial * sin(float(-m) * theta);
    else return radial * cos(float(m) * theta);
}

void main() {
    // Normalize coordinates to [-1, 1] range centered at (0,0)
    vec2 uv = (gl_FragCoord.xy - resolution.xy * 0.5) / (resolution.y * 0.5);
    vec2 polar = cartesianToPolar(uv);

    // Calculate wavefront aberration by summing Zernike terms
    float density = 0.0;
    for (int i = 0; i < 15; i++) {
        density += zernikeCoeffs[i] * zernike(i, polar);
    }

    // Scale and convert to visible range
    density *= scale;
    float value = (density + 1.0) * 0.5; // Map [-1,1] to [0,1]
    value = clamp(value, 0.0, 1.0);

    // Output as grayscale density map
    FragColor = vec4(vec3(value), 1.0);
}
```
