Intro

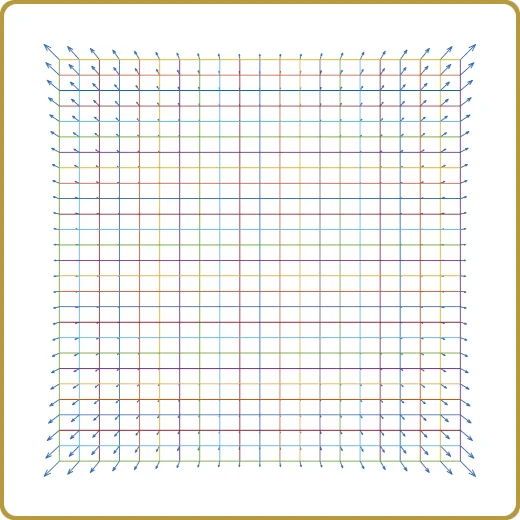

For most of my career in optics, I have been simulating imaging of spatial light modulators, and mostly, SLMs illuminated with partially coherent sources. Usually, the FFT makes a fairly swift job of it, for a single image at least. Sometimes, though, One needs to do a lot of simulations. One such example is when working on the optical requirements for an optical system. An approach that turned to be useful is to use Latin Hypercube Sampling. For an optical system, one would probably include up to 5th order aberrations, maybe a little more and this becomes a rather large simulation. Maybe 1000 or 2000 images. Then, maybe you look at the data. Perhaps the probed space (range) was too small, or too large, and one may want to redo those simulations a couple of times.

Although it is the computer doing it, it is not always easy to schedule in some other task, and even if there is, task switching on a half-hour or hour (or maybe more) basis is not very productive. Therefore, to be able to compute two-dimensional FFTs fast matters, and matters quite a lot.

The Structure of an Image Simulation

The approach to partially coherent image simulations usually depends on if you plan to compute lots of images using one specific distribution of sources, then maybe your approach to this would be SOCS (Sum Of Coherent Systems), or If you want to look for a good intensity distribution, the the overhead with SOCS is not worth it and the approach will be to just compute the inverse transforms of the amplitudes reflected by your mask or, as was usually the case for me, SLM. Each of these inverse transforms represents an image whose intensity must be summed up in order to find the full exposure.

Depending on the size of the periodic domain, and the pupil fill-factor of the illumination (usually called sigma), we could easily end up with needing to do several hundreds of inverse transforms. At this point, one can decide to do a coarser sampling of the light source, but I would not go significantly below 100 illumination points. If we go down to 25 (for example), there will definitely be visible contributions from the periodicity of the coherence function. Even 50 is pushing so a a rule-of-thumb, 100 is not a bad number. Sometimes, the sampling density is given by a homogenizer so there is not much more we can add to that.

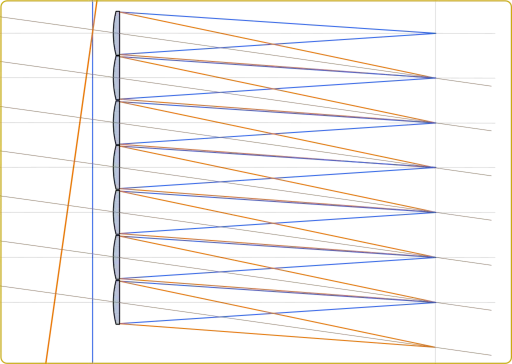

So, to sum it up, the basic image simulation I will talk about here consists of one forward transform to compute the amplitude that illuminates the system pupil, and a slew of inverse transforms which are just trivial translations of one transform, to compute the partial images. There is some pupil filtering in between but that is a computationally very cheap operation.

CUDA API

At first, the CUDA API may seem a little intimidating, and even with my rather substantial time doing these things, I was a little anxious how much pain I would have to put myself through before I exit the learning curve. What complicated matters somewhat was the fact that I wanted code that could be used together with Octave or Matlab and I wanted it in C. This code must also return identical results when compiled for user that do not have access to CUDA.

To my surprise, CUDA was much easier to get into that I anticipated, and things that I was worried about, like having to reboot an endless number of times because I managed to lock up my graphics card never happened.

A few strategic choices

The first decision point regarding the API was, should I use the CUDA FFTW-wrapper library or back-off a little and use the cuFFT library directly. If you have a stand-alone application based on the FFTW-library, then maybe it is a choice, even if there are some obvious things that this wrapper just cannot do, such as allocating both device and host buffers for your data. In the CUDA lingo, “device” memory is on the GPU and host memory is bound to you CPU.

With Octave/Matlab, there’s one more thing, which is that using the CUDA FFTW-wrapper library will create name conflicts between FFTW and identically named functions in the wrapper library. Even if we decide to provide implementations to all Octave/Matlab FFT variants, we still won’t know if there isn’t some binary somewhere expecting to get a plan from fftw_plan_dft_2d, and while the wrapper library provides such a function, the libraries are not binary compatible. Therefore, using the conversion guide to cuFFT is a good idea.

The Most Basic Solution

So, let’s start lite. What is the bare minimum we must do to run FFTs on the GPU? Actually, that does not require much. We must

- Allocate GPU memory – device_mem = cudaMalloc(n);

- Make a plan – cufftPlan2D(&plan, n1, n2, CUFFT_Z2Z);

- Copy host data to the GPU – cudaMemcpy(device_mem, host_mem, n, cudaMemcopyDefault);

- Execute the plan – cufftExecZ2Z(plan, device_mem_in, device_mem_out, CUFFT_FORWARD);

- Bring the result back – cudaMemcpy(host_mem, device_mem, n, cudaMemcpyDefault);

I am obviously quite sloppy here, but moving along these lines will indeed let us to perform the FFT on the GPU, but it would also be a bit of a disappointment. Most of the time would be spent copying data to and form the device. Chances are good (if good is the right word even) that we would not notice that the GPU does anything at all, except that it would actually compute the correct result.

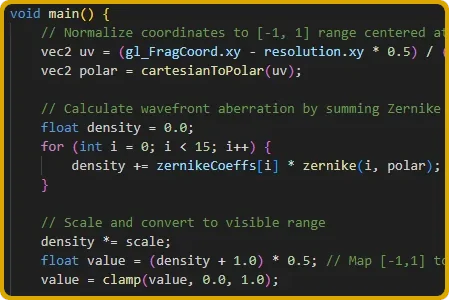

So, what was it that we needed to do with the data we copied back from the GPU? The only thing we needed to do was to compute the product of the transform and its conjugate for each sample and accumulate the sum until we are done scanning the illumination sources. If we could do that on the GPU, then at least we would not have to read back the result more than once. This means that we must learn how to execute some trivial code on the GPU.

A piece of code that would do that is:

__global__ void _launchAddIntensity2Df(float *img, const cufftComplex *amp, float factor, int n)

{

int idx = blockIdx.x * blockDim.x + threadIdx.x;

if (idx < n) {

float re = amp[idx].x;

float im = amp[idx].y;

img[idx] += (re * re + im * im) * factor;

}

}

extern "C" void addIntensity2Df(float *img, const cufftComplex *amp, float factor, int n) {

int blockSize = 256;

int gridSize = (n + blockSize - 1) / blockSize;

_launchAddIntensity2Df<<<gridSize, blockSize>>>(img, amp, factor, n);

}

The prototype for addIntensity2Df has to be exported to a header and can be used from regular C code. After the main loop over sources is done, we will have to read back the accumulated intensity and free a whole lot of buffers.

A block size of 256 worked out quite well, but this is tunable. It’s a good idea to put pieces of code like this in it own .cu file and compile it with nvcc. This allowed us to compile the remaining standard C-code with it’s usual optimizations using GCC or Clang. And there is no problem then linking it all to a .mex file and use with Octave.

The CUDA API offers many ways to address this simulation in order to achieve better GPU utilization, such for example streams but even with this simple implementation, we can achieve 70% GPU utilization for large simulation domains (for example 2048×2048 sampling points). There is, however, little to be gained to implement an alternative to FFTW for a single transform. The overhead almost completely consumes the gain of doing the computation on the GPU.

Worth the Effort?

So, was this worth it? My main GPU is a Ryzen 7 8700G. The code I usually run has been optimized for this type of CPU for quite some time to get most out of the 8 cores. The GPU that I wanted to compare it with is an RTX 3070Ti, not a speed daemon even by the standards of the days when in was released. Still, the results show it computes partially coherent images about 10 times faster than this somewhat recent CPU. It’s a nice speed-up for a first attempt. Not so easy to find a 10 times faster CPU at any price.

To keep this humming at decent speed, one obsoletely has to keep down the amount of data transferred per loop. Since this was a minimal effort based on code optimized for a CPU, I was rather reluctant to convert too much code to run on the GPU. Luckily, one only has to copy the part of the transform that gets through the pupil, which is a very limited amount of data to copy. Perhaps in some future review, I will move more of the code to the GPU and then all per iteration copies will be eliminated. There may be another 60%-70% speed gain to be had and once all the small bottlenecks are eliminated, a 5070Ti might really shine.

Looking back at it, computing partially coherent images is a perfect fit for a GPU. The large number of transforms that need to be done can be kept on the GPU and and accumulating the intensity is really simple, and the GPU does a fantastic job of it. From the top of my head, I cannot find an application that gives so much improvement with so little effort.

Leave a Reply