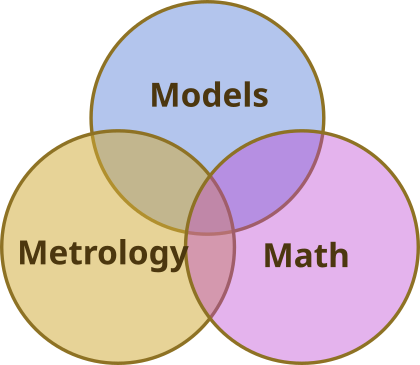

This Tech-Talk is about technology development in general and, more specifically, incremental technology development. It is shaped by my own experience in the optics industry, where the optical module is but one component in a larger system that combines diverse engineering disciplines such as mechanics, optics, mathematics, and software.

Expectations – make sure to manage them

Not all simple things are in fact simple. Make enough simple things depend on each other, and the result is often no longer simple. This is often the case for system integrators, who may outsource or acquire high-end components and then add their own niche technology and market knowledge to assemble complex products.

Eventually, the question becomes: do we meet the expectations? What expectations? If we are in a mature market, expectations are fairly well known. Sometimes, expectations are set by fundamental physical limits. Many mature products operate within a small factor of the limits set by fundamental physics. Your phone is one such example: already 25 years ago, phone receivers operated not many dB above the thermal noise limit set by temperature and Boltzmann’s constant.
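To put a number on that limit, here is a minimal Python sketch that computes the thermal noise floor kTB in dBm, assuming the conventional 290 K reference temperature. The function names, the 200 kHz channel bandwidth and the 6 dB receiver noise figure are illustrative assumptions, not figures for any particular phone.

```python
import math

BOLTZMANN_K = 1.380649e-23  # J/K (exact value in the 2019 SI)

def thermal_noise_floor_dbm(bandwidth_hz: float, temperature_k: float = 290.0) -> float:
    """Available thermal noise power kTB, expressed in dBm (decibels re 1 mW)."""
    noise_power_w = BOLTZMANN_K * temperature_k * bandwidth_hz
    return 10.0 * math.log10(noise_power_w / 1e-3)

def receiver_noise_floor_dbm(bandwidth_hz: float, noise_figure_db: float,
                             temperature_k: float = 290.0) -> float:
    """Input-referred noise floor of a real receiver: kTB plus its noise figure."""
    return thermal_noise_floor_dbm(bandwidth_hz, temperature_k) + noise_figure_db

# Hypothetical example values: 200 kHz channel, receiver with a 6 dB noise figure.
print(f"kTB per Hz at 290 K: {thermal_noise_floor_dbm(1.0):.1f} dBm")   # -174.0 dBm
print(f"kTB in 200 kHz:      {thermal_noise_floor_dbm(200e3):.1f} dBm")  # -121.0 dBm
print(f"with 6 dB NF:        {receiver_noise_floor_dbm(200e3, 6.0):.1f} dBm")  # -115.0 dBm
```

The noise figure is, in effect, the number of dB by which a receiver sits above the fundamental kTB limit, which makes it a convenient yardstick for "how close to physics" a design is.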

However, some products are still approaching these limits. In the name of due diligence, shouldn’t every product owner know where their product stands relative to the fundamental limits? As products in the high-end segments mature, they tend to get there.

The fog of var

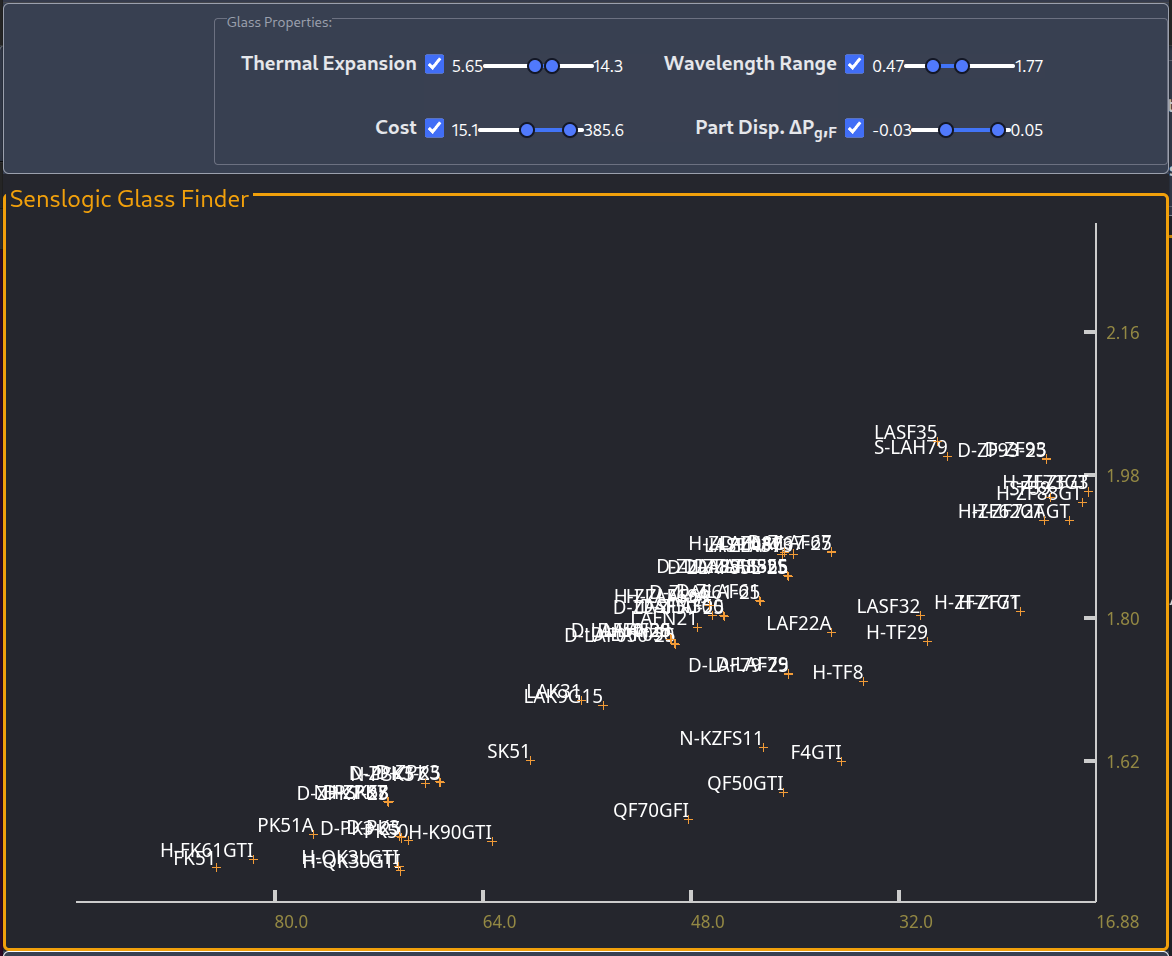

No, I didn’t spell that wrong. You read that correctly. So what do I mean by that? This tech talk is about system integrators, and in this context a system integrator is someone who assembles a product from many moving parts on which the final result depends. As engineers, we know about calibration. It allows us to compensate for some fairly complex phenomena as long as they are repeatable, be it in time or space. Because calibration takes care of the repeatable part, the quality of many products is measured by how far, or rather how close, we stay to the nominal target on average. The measure for this is usually the variance, but since we prefer to talk about primary quantities rather than squared ones, we take the square root and talk about standard deviations instead. Nevertheless, the underlying measure of quality emerges from a sum of squares of (often) independent contributions.
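A minimal sketch of that sum of squares, assuming the residual error sources left after calibration are independent (uncorrelated), so that their variances add. The budget entries, their values (arbitrary units) and the `rss` helper name are hypothetical.

```python
import math

def rss(sigmas):
    """Root-sum-square of independent error contributions (standard deviations).
    Variances of independent terms add, so the total sigma is sqrt(sum of squares)."""
    return math.sqrt(sum(s * s for s in sigmas))

# Hypothetical error budget (arbitrary units): residual errors left after calibration.
budget = {"thermal drift": 3.0, "stage jitter": 4.0, "detector noise": 1.0, "alignment": 2.0}
total = rss(budget.values())
print(f"total sigma = {total:.2f}")  # total sigma = 5.48
```

Note that the total (about 5.5) is well below the plain sum of the contributions (10). The same root-sum-square arithmetic is what makes individual terms hard to see in the total.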

The incrementor’s dilemma

This is not a dig at Clayton Christensen; it is about the pros and cons of incremental engineering, especially for system integrators. When we are measured by a quality number based on a variance, our knowledge of what ails the product can be dramatically blurred, because a large sum of squared errors, eventually reported as a single standard deviation, hides the individual terms. Say we jump into a project and completely squash one of those errors, and in the end all that effort is rewarded with a 3% improvement in the standard deviation we benchmark our product by. That is not a fun meeting with management. We spent 3 or 6 months of profit on a marginal performance improvement. Won’t happen again.
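The arithmetic behind such a disappointing 3% is easy to reproduce. The sketch below assumes independent contributions combined by root-sum-square; the 16 equal-sized terms are a hypothetical budget and the function names are illustrative. Eliminating one of 16 equal terms removes 6.25% of the variance but only about 3.2% of the standard deviation. Conversely, a single term must be roughly 24% of the total standard deviation before removing it completely buys a 3% improvement.

```python
import math

def improvement_after_removing(sigmas, index):
    """Relative drop in the RSS total when contribution `index` is eliminated entirely."""
    total = math.sqrt(sum(s * s for s in sigmas))
    remaining = math.sqrt(sum(s * s for i, s in enumerate(sigmas) if i != index))
    return 1.0 - remaining / total

def sigma_fraction_for_gain(gain):
    """Size of a single term, as a fraction of the total standard deviation,
    whose complete removal improves the total by `gain` (e.g. 0.03 for 3%).
    Follows from (1 - gain)**2 = 1 - fraction**2 for independent terms."""
    return math.sqrt(1.0 - (1.0 - gain) ** 2)

# Hypothetical budget: 16 independent contributions of equal size.
equal_terms = [1.0] * 16
print(f"{improvement_after_removing(equal_terms, 0):.1%}")  # 3.2%
print(f"{sigma_fraction_for_gain(0.03):.2f}")               # 0.24
```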

What now, then? Do we stop improving? Did we hit the physical limits? This is where we need to know exactly how and why the products we build perform as they do. Quite substantial errors can hide inside a variance, and if we don’t do the details, we won’t know which one to target first until we’ve managed to squash slews of minor contributions that used to hide it. And there is a risk we will never get the chance to get there.
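Doing the details can start with something as simple as decomposing the budget and ranking each term by its share of the total variance. In this hypothetical budget (arbitrary units, independent terms assumed, illustrative names), one 2.5-unit error sits among twenty 1-unit errors. It accounts for under a quarter of the variance, yet it is the obvious first target once the terms are listed individually, and it dominates once enough minor terms have been squashed.

```python
def variance_shares(budget):
    """Each contribution's share of the total variance, largest first."""
    total_var = sum(s * s for s in budget.values())
    shares = {name: s * s / total_var for name, s in budget.items()}
    return sorted(shares.items(), key=lambda item: item[1], reverse=True)

# Hypothetical budget: one substantial error hiding among many minor ones.
budget = {f"minor {i}": 1.0 for i in range(20)}
budget["hidden major"] = 2.5

top_name, top_share = variance_shares(budget)[0]
print(f"{top_name}: {top_share:.1%} of variance")  # hidden major: 23.8% of variance

# After squashing 15 of the minor terms, the same error dominates the budget.
for i in range(15):
    del budget[f"minor {i}"]
top_name, top_share = variance_shares(budget)[0]
print(f"{top_name}: {top_share:.1%} of variance")  # hidden major: 55.6% of variance
```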

An anecdote

I have a story here from my own past: the calibration of a tilt-mirror spatial light modulator (SLM). As simple as that may seem today, it was considered difficult at the time. To solve it, I built a model containing what I thought would be the relevant variables, tested various ideas, and eventually one of them panned out.

However, my approach was met with scepticism. The machine did not perform to expectations, and I received a fair amount of blowback. But because I had paid attention to detail, I knew that all intermediate results of the calibration process aligned perfectly with the model’s expectations. Here lies the first lesson: keep track of our expectations. I didn’t need to keep my head down too much; the problems were not in the algorithm. It took almost three years for the SLM charging and mechanical drift problems to be solved, and lo and behold, the algorithm started to deliver the results the model had anticipated.

Having a detailed enough model turned out to be crucial during this development. It took less than two weeks to write, and that was even my first attempt. Worth every second. I am quite sure that without it, I would have folded under pressure trying to solve problems that were not mine to solve.

The takeaway

So, what is the takeaway from all this? From my early days 25 years ago all the way to as late as last week, I have been reminded of the value of knowing what the result should be: how the product should perform. Whatever I have developed, my goal has been to know how the product performs before it is built. That is not always possible, but the ambition produces invaluable insights. Sometimes there are no shoulders of giants to stand on. When short on giants, build models, detailed enough to capture the complexities of your product, so you know what to expect when you turn on the light.
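In the spirit of knowing how the product performs before it is built, here is a minimal Monte Carlo sketch. It assumes a deliberately simple, hypothetical product model: four independent, zero-mean Gaussian error sources with made-up standard deviations, and illustrative names (`ERROR_MODEL`, `simulate_builds`). A real model would replace these draws with the product’s actual physics and interactions, which is where simulation earns its keep over a closed-form estimate. For this toy model the simulated spread should agree with the root-sum-square prediction, which doubles as a sanity check of the simulation itself.

```python
import math
import random
import statistics

# Hypothetical product model: each entry is the standard deviation of an
# independent, zero-mean error source (arbitrary units).
ERROR_MODEL = {"thermal drift": 3.0, "stage jitter": 4.0, "detector noise": 1.0, "alignment": 2.0}

def simulate_builds(model, n_builds=20000, seed=1):
    """Monte Carlo: draw every error source for each simulated build and sum them."""
    rng = random.Random(seed)  # fixed seed for reproducible runs
    return [sum(rng.gauss(0.0, sigma) for sigma in model.values()) for _ in range(n_builds)]

outcomes = simulate_builds(ERROR_MODEL)
predicted = math.sqrt(sum(s * s for s in ERROR_MODEL.values()))  # closed-form RSS
simulated = statistics.pstdev(outcomes)                           # spread of simulated builds
print(f"RSS prediction: {predicted:.2f}, Monte Carlo: {simulated:.2f}")
```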
